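Let's try out coroutines and see what they can do: this post uses Hyperf coroutines to speed up a crawler. It is for learning and discussion only; please do not use it commercially.

Sometimes you need to read another table's contents across servers, or you find videos and images you like that cannot be downloaded in bulk. Plenty of tools can capture the API requests an app makes; once you have extracted the endpoint URL with one of them, most endpoints turn out to return JSON. So how do you call an external API and read its JSON from PHP?

The fetch method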

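// Advanced cross-server usage
public function getapktj($url)
{
    $method = "GET";
    $curl = curl_init();
    curl_setopt($curl, CURLOPT_CUSTOMREQUEST, $method);
    curl_setopt($curl, CURLOPT_URL, $url);
    curl_setopt($curl, CURLOPT_FAILONERROR, false);   // do not treat HTTP status >= 400 as a cURL failure
    curl_setopt($curl, CURLOPT_RETURNTRANSFER, true); // return the body instead of printing it
    curl_setopt($curl, CURLOPT_HEADER, false);        // keep response headers out of the body
    $ret = curl_exec($curl);
    if ($ret === false) {
        // The request failed; json_decode() must not be handed false
        return null;
    }
    // Returns an associative array (null if the body is not valid JSON)
    return json_decode($ret, true);
}

Calling the fetch method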

<?php

declare(strict_types=1);

/**

* This file is part of Hyperf.

*

* @link https://www.hyperf.io

* @document https://hyperf.wiki

* @contact group@hyperf.io

* @license https://github.com/hyperf/hyperf/blob/master/LICENSE

*/

namespace App\Controller;

use QL\QueryList;

use Hyperf\DbConnection\Db;

class VideosController extends AbstractController

{

public function getvideos(){

$url = "http://81.70.181.238/%2Fhot_video%2FgetList?page=".$i."&limit=30";

$eq=$this->getapktj($url);

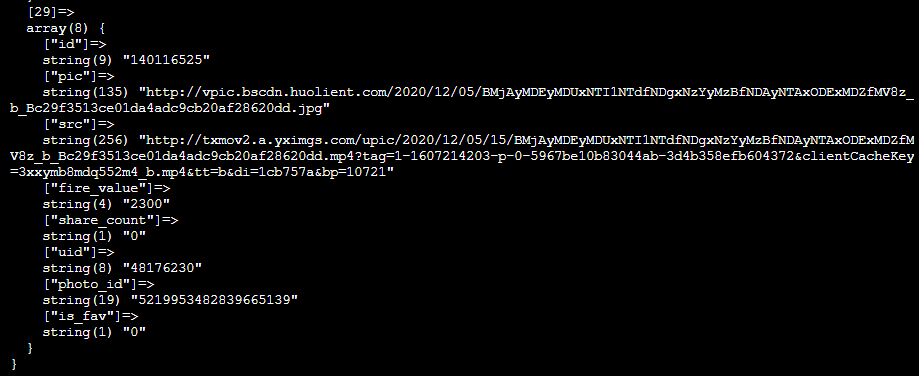

$res=$eq['data']['list'];

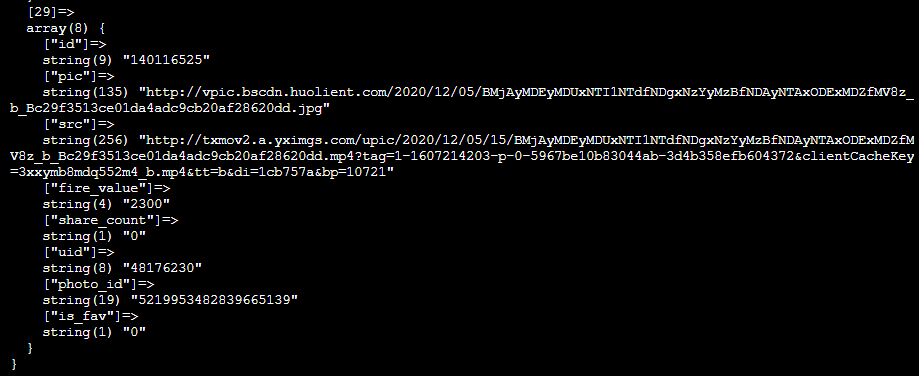

var_dump($res);

}

//跨服务器高级用法

public function getapktj($url){

$method ="GET";

$curl = curl_init();

curl_setopt($curl, CURLOPT_CUSTOMREQUEST, $method);

curl_setopt($curl, CURLOPT_URL, $url);

curl_setopt($curl, CURLOPT_FAILONERROR, false);

curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);

curl_setopt($curl, CURLOPT_HEADER, false);

$ret = curl_exec($curl);

$all=json_decode($ret,true);

return $all;

}

}
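The fetch method above has no timeout and does not separate a failed request from a body that is not JSON. As a rough sketch of one way to tighten that up, the standalone functions below split decoding from transport. The names `decodeJsonBody` and `fetchJson` and the timeout values are assumptions made for illustration; they are not part of the original controller.

```php
<?php

declare(strict_types=1);

/**
 * Turn a raw response body into an array.
 * Returns null when the request failed (false), the body is empty,
 * or the JSON does not decode to an array.
 */
function decodeJsonBody($body): ?array
{
    if (!is_string($body) || $body === '') {
        return null;
    }
    $decoded = json_decode($body, true);
    return is_array($decoded) ? $decoded : null;
}

/**
 * GET $url and return the decoded JSON, or null on any failure.
 * The timeout values are illustrative assumptions, not measured settings.
 */
function fetchJson(string $url, int $timeoutSeconds = 10): ?array
{
    $curl = curl_init();
    curl_setopt_array($curl, [
        CURLOPT_URL            => $url,
        CURLOPT_RETURNTRANSFER => true, // return the body instead of printing it
        CURLOPT_CONNECTTIMEOUT => 5,    // give up if no connection within 5 s
        CURLOPT_TIMEOUT        => $timeoutSeconds,
    ]);
    $body = curl_exec($curl); // false on transport errors
    return decodeJsonBody($body);
}
```

A caller can then write `$res = fetchJson($url)['data']['list'] ?? [];` and treat an empty list as "nothing to insert", whichever way the request failed.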

100 pages without coroutines, crawling only, no database inserts

<?php

declare(strict_types=1);
/**
 * This file is part of Hyperf.
 *
 * @link     https://www.hyperf.io
 * @document https://hyperf.wiki
 * @contact  group@hyperf.io
 * @license  https://github.com/hyperf/hyperf/blob/master/LICENSE
 */
namespace App\Controller;

use QL\QueryList;
use Hyperf\DbConnection\Db;

class VideosController extends AbstractController
{
    public function getvideos()
    {
        $time = time();
        for ($i = 800; $i >= 1; $i--) {
            echo($i . PHP_EOL);
            $url = "http://81.70.181.238/%2Fhot_video%2FgetList?page=" . $i . "&limit=30";
            $eq = $this->getapktj($url);
            // Fall back to an empty list if the request failed or the keys are missing
            $res = $eq['data']['list'] ?? [];
            $data = array();
            if (!empty($res)) {
                foreach ($res as $re) {
                    $r['title'] = "";
                    $r['coverpath'] = $re['pic'];
                    $r['videopath'] = $re['src'];
                    $r['created_at'] = time();
                    $data[] = $r;
                }
                // One bulk insert per page
                Db::table('videos')->insert($data);
            }
        }
        // Elapsed time in whole seconds
        var_dump(time() - $time);
    }

    // Advanced cross-server usage
    public function getapktj($url)
    {
        $method = "GET";
        $curl = curl_init();
        curl_setopt($curl, CURLOPT_CUSTOMREQUEST, $method);
        curl_setopt($curl, CURLOPT_URL, $url);
        curl_setopt($curl, CURLOPT_FAILONERROR, false);
        curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);
        curl_setopt($curl, CURLOPT_HEADER, false);
        $ret = curl_exec($curl);
        if ($ret === false) {
            // With strict_types, json_decode(false) would throw a TypeError
            return null;
        }
        return json_decode($ret, true);
    }
}

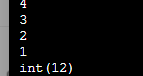

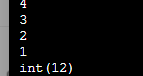

Elapsed time: 12 seconds
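The listing measures elapsed time with `time()`, which only resolves whole seconds. A small sketch of a finer-grained measurement using `hrtime(true)` (PHP 7.3+) follows; the helper name `timed` and the toy workload are assumptions for illustration only.

```php
<?php

declare(strict_types=1);

/**
 * Run $work and return [result, elapsed seconds as a float].
 * hrtime(true) is a monotonic counter in nanoseconds.
 */
function timed(callable $work): array
{
    $start = hrtime(true);
    $result = $work();
    $elapsed = (hrtime(true) - $start) / 1e9; // nanoseconds to seconds
    return [$result, $elapsed];
}

// Toy workload standing in for the crawl loop
[$sum, $seconds] = timed(function () {
    return array_sum(range(1, 800));
});
printf("sum=%d, took %.6f s\n", $sum, $seconds);
```

Wrapping the body of `getvideos()` in such a helper would report fractions of a second, which matters once a run takes only a few seconds.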

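Coroutine optimization (100 pages finish in the blink of an eye, too fast to notice any difference, so this run crawls and stores 800 pages. Why 800? That is how many pages this API currently has.)

Edit config/autoload/server.php

Add the following under 'settings':

// Coroutine-enable blocking cURL and DB calls in one step. If it has no effect, check that your Swoole version is the right one.
'hook_flags' => SWOOLE_HOOK_ALL | SWOOLE_HOOK_CURL,

Then add the coroutine code: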

<?php

declare(strict_types=1);
/**
 * This file is part of Hyperf.
 *
 * @link     https://www.hyperf.io
 * @document https://hyperf.wiki
 * @contact  group@hyperf.io
 * @license  https://github.com/hyperf/hyperf/blob/master/LICENSE
 */
namespace App\Controller;

use QL\QueryList;
use Hyperf\DbConnection\Db;

class VideosController extends AbstractController
{
    public function getvideos()
    {
        $time = time();
        for ($i = 800; $i >= 1; $i--) {
            // One coroutine per page; the hooked cURL and DB calls yield instead of blocking
            co(function () use ($i) {
                echo($i . PHP_EOL);
                $url = "http://81.70.181.238/%2Fhot_video%2FgetList?page=" . $i . "&limit=30";
                $eq = $this->getapktj($url);
                // Fall back to an empty list if the request failed or the keys are missing
                $res = $eq['data']['list'] ?? [];
                $data = array();
                if (!empty($res)) {
                    foreach ($res as $re) {
                        $r['title'] = "";
                        $r['coverpath'] = $re['pic'];
                        $r['videopath'] = $re['src'];
                        $r['created_at'] = time();
                        $data[] = $r;
                    }
                    Db::table('videos')->insert($data);
                }
            });
            // var_dump($data);
        }
        // The coroutines are not awaited, so timing here would not cover the whole crawl
        // var_dump(time() - $time);
    }

    // Advanced cross-server usage
    public function getapktj($url)
    {
        $method = "GET";
        $curl = curl_init();
        curl_setopt($curl, CURLOPT_CUSTOMREQUEST, $method);
        curl_setopt($curl, CURLOPT_URL, $url);
        curl_setopt($curl, CURLOPT_FAILONERROR, false);
        curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);
        curl_setopt($curl, CURLOPT_HEADER, false);
        $ret = curl_exec($curl);
        if ($ret === false) {
            // With strict_types, json_decode(false) would throw a TypeError
            return null;
        }
        return json_decode($ret, true);
    }
}

Across many test runs, crawling and storing 800 pages (about 5,000 rows) took under 3 seconds.

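This post does not solve the out-of-memory error "Allowed memory size of 268435456 bytes exhausted". You can adapt the approach from this post: 藏羚骸的博客~hyperf协程免费查询快递物流. The idea is to add a coroutine wait: start 100 coroutines at a time, wait until all 100 have finished, and only then start the next 100.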
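The batching idea can be sketched as follows. This is only one possible reading of it, not the referenced post's code: it assumes the Swoole extension's `Swoole\Coroutine\WaitGroup`, assumes it is called from inside a coroutine (as a Hyperf controller action is), and the function names `pageBatches` and `crawlInBatches` are made up for this example.

```php
<?php

declare(strict_types=1);

use Swoole\Coroutine;
use Swoole\Coroutine\WaitGroup;

/** Split the page range [$first, $last] into chunks of at most $size pages. */
function pageBatches(int $first, int $last, int $size): array
{
    return array_chunk(range($first, $last), $size);
}

/**
 * Run $task($page) for every page, one batch at a time.
 * Every coroutine of a batch must finish before the next batch starts,
 * so at most one batch of coroutines is alive at any moment.
 */
function crawlInBatches(array $batches, callable $task): void
{
    foreach ($batches as $batch) {
        $wg = new WaitGroup();
        foreach ($batch as $page) {
            $wg->add();
            Coroutine::create(function () use ($wg, $page, $task) {
                try {
                    $task($page);
                } finally {
                    $wg->done(); // count this coroutine as finished even if $task throws
                }
            });
        }
        $wg->wait(); // suspend until the whole batch is done
    }
}
```

In `getvideos()` this might be called as `crawlInBatches(pageBatches(1, 800, 100), function (int $page) { /* fetch the page and insert its rows */ });`. Because the call returns only after the last batch has finished, an elapsed-time measurement placed after it would cover the whole crawl.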

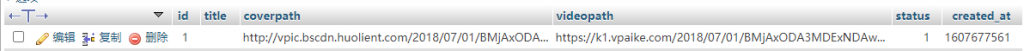

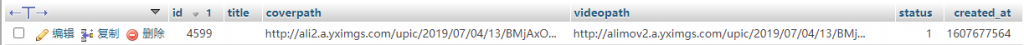

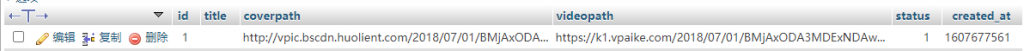

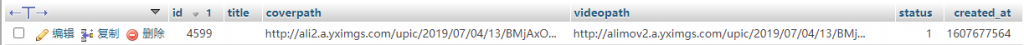

Once you have the data, you can spin off a "vest" (sock-puppet) site from it.
